Robot-assisted Data Visualisation

Looking at Robot Data Visualisation from an Interaction and Decision-Making Perspective

Project Description

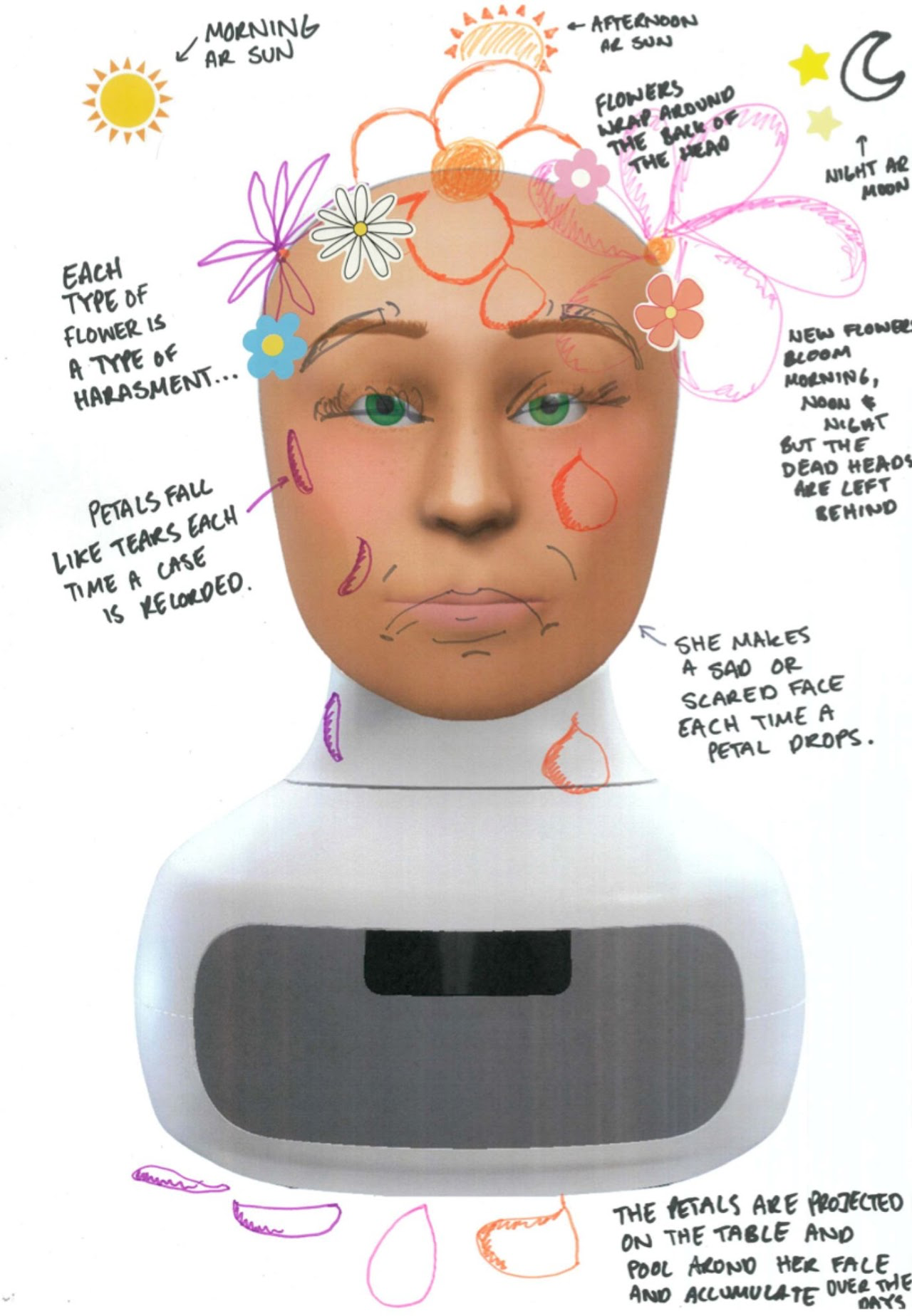

In human-robot interaction (HRI), robots increasingly generate data that is used to support human decision-making or to collaborate with humans. From conveying uncertainty in decision-making to using expressive designs for emotional engagement, the field of robot-assisted data visualisation is exploring new methods to enhance transparency, trust, and collaboration. Robots, as embodied agents, do not only display data: through their behaviours and interfaces, they also influence how humans make decisions and perceive emotion.

Our recent studies have investigated how robots can communicate their confidence and uncertainty through graphical user interfaces (GUIs) or through embodied visualisations, such as motion and hesitation gestures (Schömbs et al., 2024). These visualisations support decision-making in high-stakes scenarios, such as healthcare or industrial tasks, by increasing transparency and user trust.
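To make the two channels concrete, here is a minimal sketch, assuming the robot exposes a single confidence score in [0, 1]. It maps that score to a GUI cue (a text icon array whose filled icons are proportional to confidence) and to an embodied cue (a hesitation pause that grows linearly as confidence falls). The names `UncertaintyCue`, `confidence_to_cues`, `n_icons`, and `max_hesitation_s`, and the linear mappings themselves, are hypothetical illustrations, not the design used in the studies.

```python
from dataclasses import dataclass


@dataclass
class UncertaintyCue:
    icon_array: str      # GUI cue: filled icons show confidence, e.g. "■■■■■■■□□□"
    hesitation_s: float  # embodied cue: pause (seconds) before the robot acts


def confidence_to_cues(confidence: float, n_icons: int = 10,
                       max_hesitation_s: float = 3.0) -> UncertaintyCue:
    """Hypothetical mapping of a robot's confidence in [0, 1] to two cues.

    GUI: the number of filled icons is proportional to confidence.
    Embodiment: hesitation grows linearly as confidence falls.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    filled = round(confidence * n_icons)
    icons = "■" * filled + "□" * (n_icons - filled)
    hesitation = (1.0 - confidence) * max_hesitation_s
    return UncertaintyCue(icon_array=icons, hesitation_s=hesitation)


if __name__ == "__main__":
    for c in (0.9, 0.5, 0.2):
        cue = confidence_to_cues(c)
        print(f"confidence={c:.1f}  {cue.icon_array}  pause={cue.hesitation_s:.1f}s")
```

For example, `confidence_to_cues(0.5)` yields five filled icons out of ten and a 1.5 s pause. Keeping both cues driven by the same score is one way to ensure the GUI and the robot's behaviour never tell the user conflicting stories.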

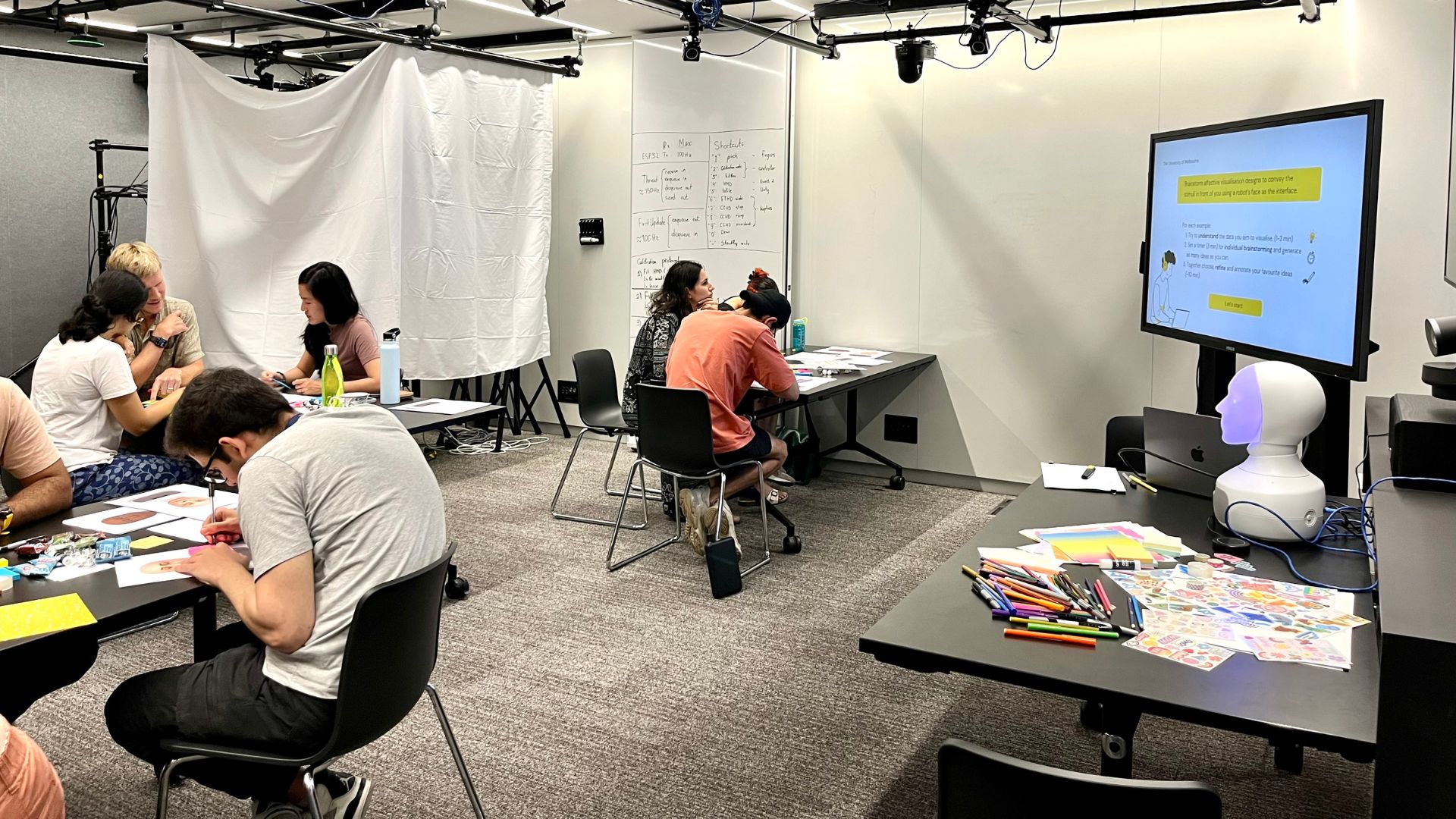

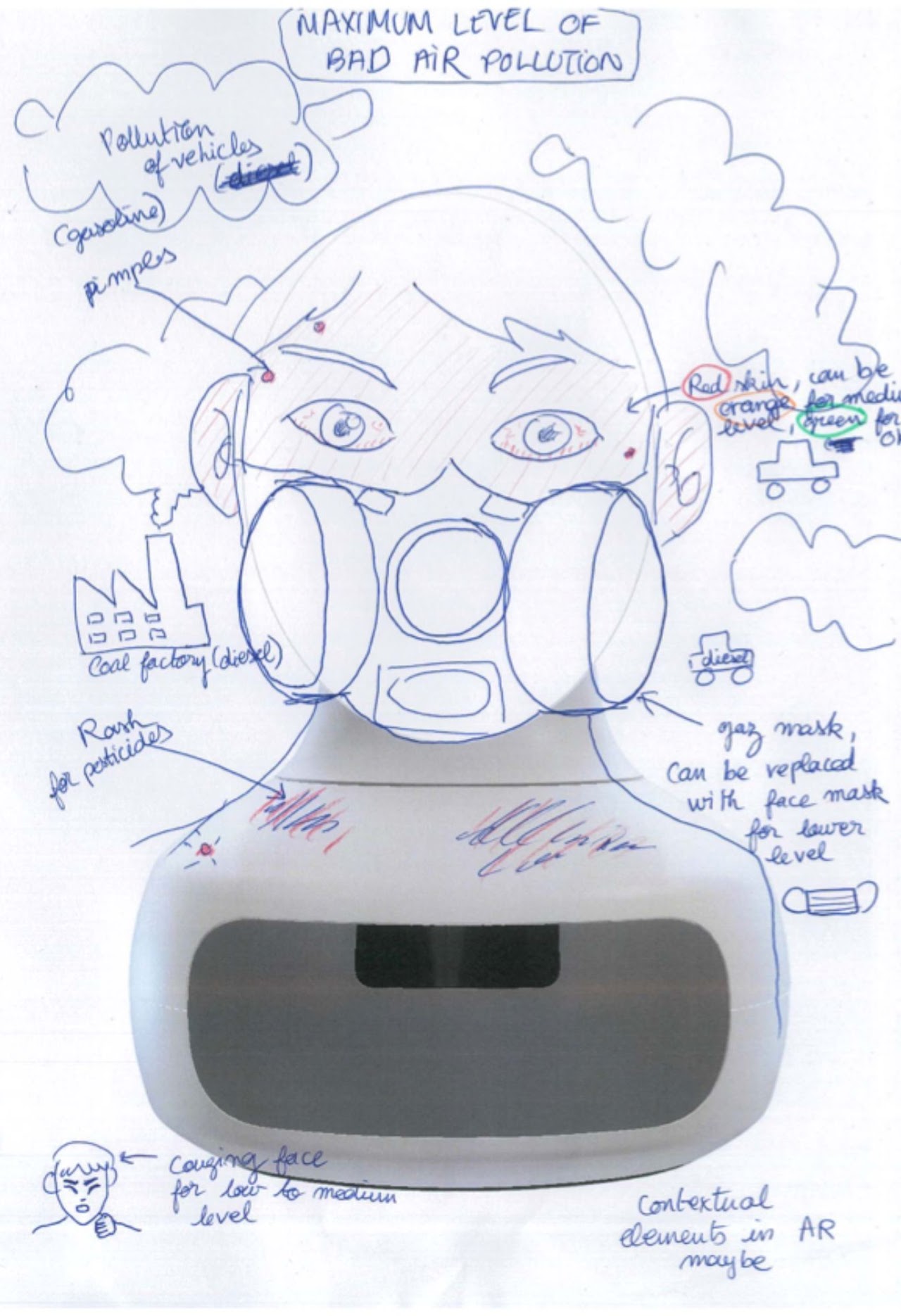

Furthermore, affective visualisations, such as using a robot’s face as the interface, offer an engaging way to communicate emotion and prompt introspection by mapping data onto expressive facial features (Schömbs et al., 2024).
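A data-to-face mapping of this kind can be sketched as follows, assuming one scalar data value (for instance a pollution reading) with known bounds. The value is normalised to [0, 1] and then drives three expressive features: wrinkle depth, tear rate, and mouth curvature. `FaceParams`, `normalise`, `data_to_face`, the feature set, and the thresholds are all hypothetical choices for illustration; they are not the actual FaceVis encoding.

```python
from dataclasses import dataclass


@dataclass
class FaceParams:
    wrinkle_depth: float  # 0 = smooth skin, 1 = deepest wrinkles
    tear_rate: float      # tears per second (hypothetical unit)
    mouth_curve: float    # +1 = full smile, 0 = neutral, -1 = full frown


def normalise(value: float, lo: float, hi: float) -> float:
    """Scale a raw data value into [0, 1], clamping values outside [lo, hi]."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(max((value - lo) / (hi - lo), 0.0), 1.0)


def data_to_face(value: float, lo: float, hi: float,
                 max_tear_rate: float = 2.0) -> FaceParams:
    """Hypothetical FaceVis-style mapping: worse data -> more distressed face."""
    t = normalise(value, lo, hi)
    return FaceParams(
        wrinkle_depth=t,                                  # wrinkles deepen steadily
        tear_rate=t * max_tear_rate if t > 0.5 else 0.0,  # tears only past the midpoint
        mouth_curve=1.0 - 2.0 * t,                        # smile fades into a frown
    )


if __name__ == "__main__":
    # e.g. a pollution index on a hypothetical 0-300 scale
    for reading in (30, 150, 270):
        print(reading, data_to_face(reading, lo=0, hi=300))
```

Using a threshold for tears while other features change continuously is one way to reserve the strongest metaphor for the most extreme data.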

This ongoing research shows how robots can extend traditional data visualisation methods with agency, adaptability, and interactivity, changing how humans interpret and interact with data in collaborative environments (Schömbs et al., 2024).

Recent Progress

- Visualising Uncertainty in Decision-Making: Recent work has explored how robots convey uncertainty and confidence levels during collaborative decision-making tasks. Through GUIs and embodied signals (e.g., motion hesitation), robots help users make more informed decisions, particularly in scenarios involving risk. For example, one study examined how graphical visualisations, such as bar graphs and icon arrays, and embodied cues, such as waiting times, affect user trust and decision accuracy in packing tasks.

- Affective Data Visualisation: The concept of “FaceVis” has introduced a novel way of mapping data onto a robot’s face for affective visualisations. This design uses metaphorical and expressive features, such as wrinkles, tears, and facial movements, to communicate complex data in an emotionally resonant manner. This approach has shown potential to foster engagement, empathy, and self-reflection when visualising data like environmental pollution or social issues.

For more information, see: https://sites.google.com/view/facevis/home?authuser=0

- Embodied Data Agency: Advances in embodied data visualisation suggest that autonomous robots can act as “data agents”, whose movements and actions embody and communicate data directly. This concept, termed “Data-Agent Interplay”, investigates how a robot’s autonomy and interactivity influence how people perceive both the data and the robot itself.
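As one illustration of a robot “performing” data through movement, here is a minimal sketch, assuming a mobile robot that drives a sequence of equal-length straight segments. Each value in a data series is min-max normalised and sets the speed for one segment, so higher values appear as faster, shorter movements. The function `series_to_motion`, its parameters, and the speed encoding are hypothetical; this is not the Data-Agent Interplay implementation.

```python
from typing import List, Tuple


def series_to_motion(values: List[float], segment_m: float = 0.5,
                     min_speed: float = 0.1,
                     max_speed: float = 1.0) -> List[Tuple[float, float]]:
    """Hypothetical data-to-motion mapping for a mobile robot.

    Each data value becomes one straight segment of length `segment_m` metres.
    The value sets the robot's speed on that segment (min-max normalised), so
    higher values are performed as faster, shorter-lasting movements.
    Returns a list of (speed in m/s, duration in s) pairs.
    """
    if not values:
        return []
    if not 0.0 < min_speed <= max_speed:
        raise ValueError("need 0 < min_speed <= max_speed")
    lo, hi = min(values), max(values)
    span = hi - lo
    plan = []
    for v in values:
        t = (v - lo) / span if span > 0 else 0.5  # constant series -> mid speed
        speed = min_speed + t * (max_speed - min_speed)
        plan.append((speed, segment_m / speed))
    return plan


if __name__ == "__main__":
    illustrative_values = [4.0, 9.0, 12.0, 6.0]  # made-up input, not study data
    for speed, duration in series_to_motion(illustrative_values):
        print(f"speed={speed:.2f} m/s for {duration:.2f} s")
```

A non-zero `min_speed` keeps the robot moving even for the lowest value, so every data point remains visible as motion rather than as an ambiguous stop.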